# A state-of-the-art search API purpose-built for agents

The Parallel Search MCP Server offers an easy-to-integrate, state-of-the-art web search solution for AI agents. Built on the same search infrastructure that powers Parallel’s Task API and Search API, it achieves higher accuracy than LLM-native web search implementations in our benchmarks while costing up to 50% less, establishing a new price-performance frontier for AI agent web access.

## **Rethinking web search for AI agents**

Mainstream search engines are designed for human browsing patterns (keyword queries, short snippets meant to drive clicks, and ad-optimized layouts) rather than the information-dense passages AI agents need to reason effectively.

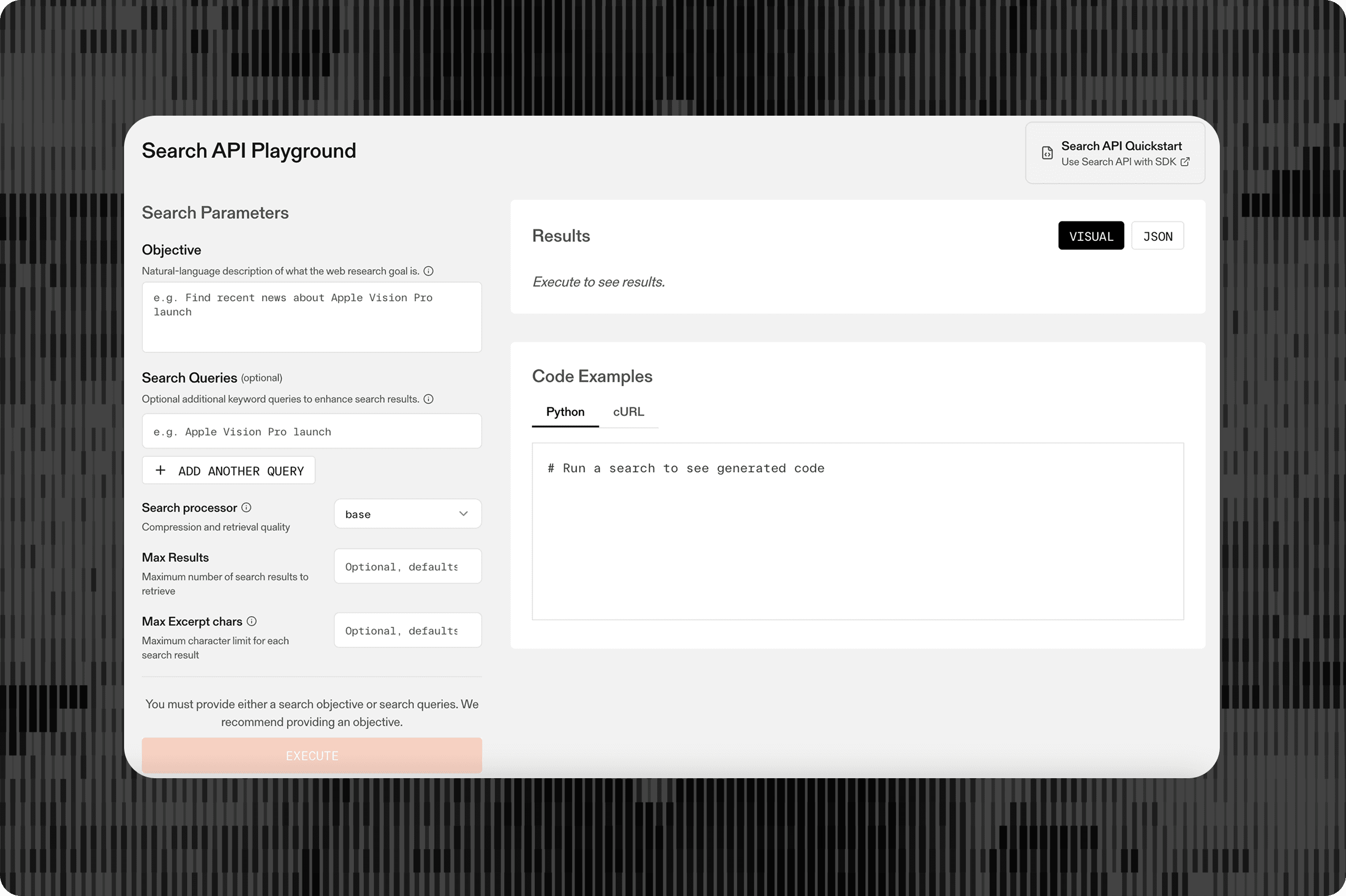

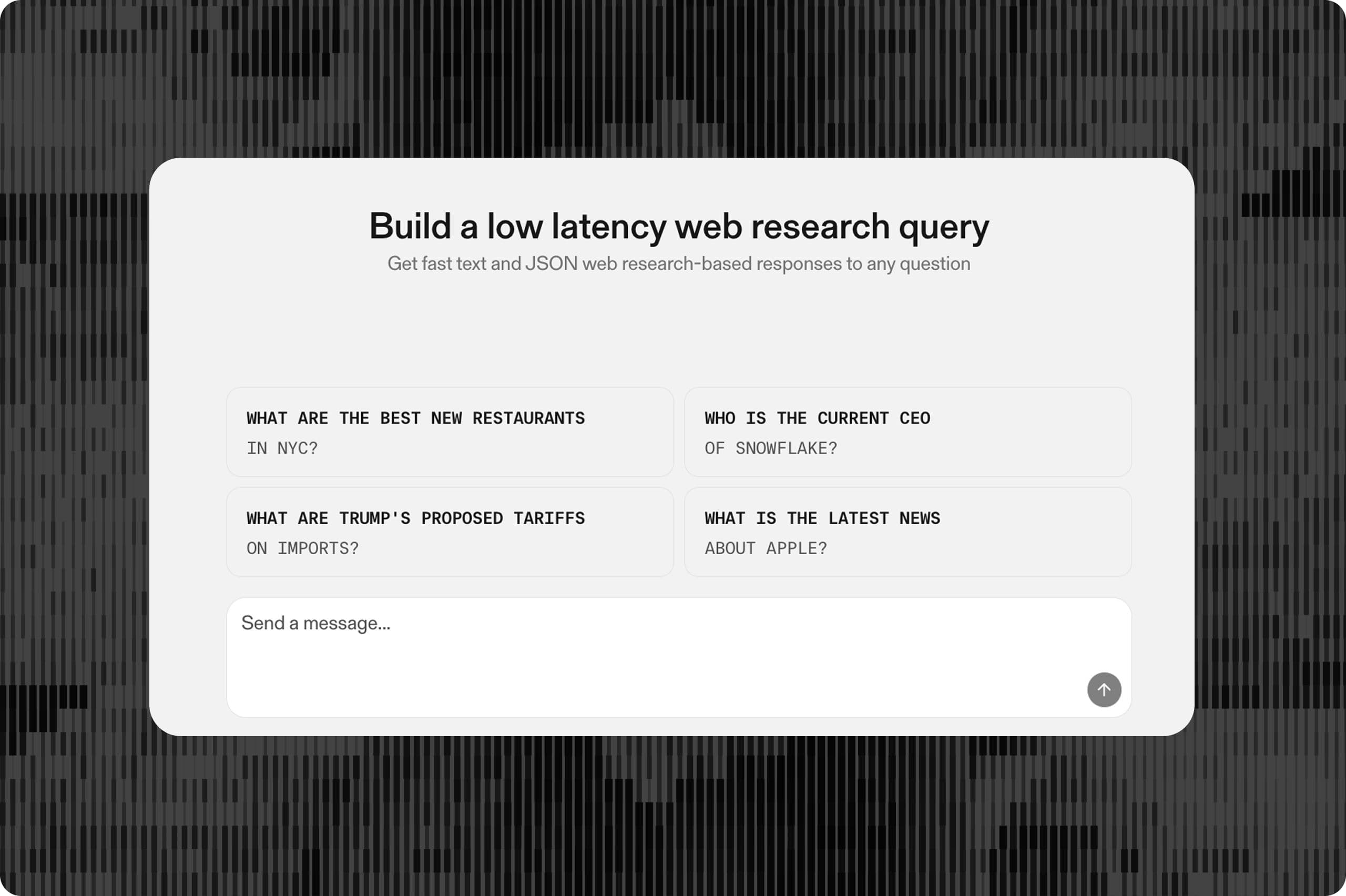

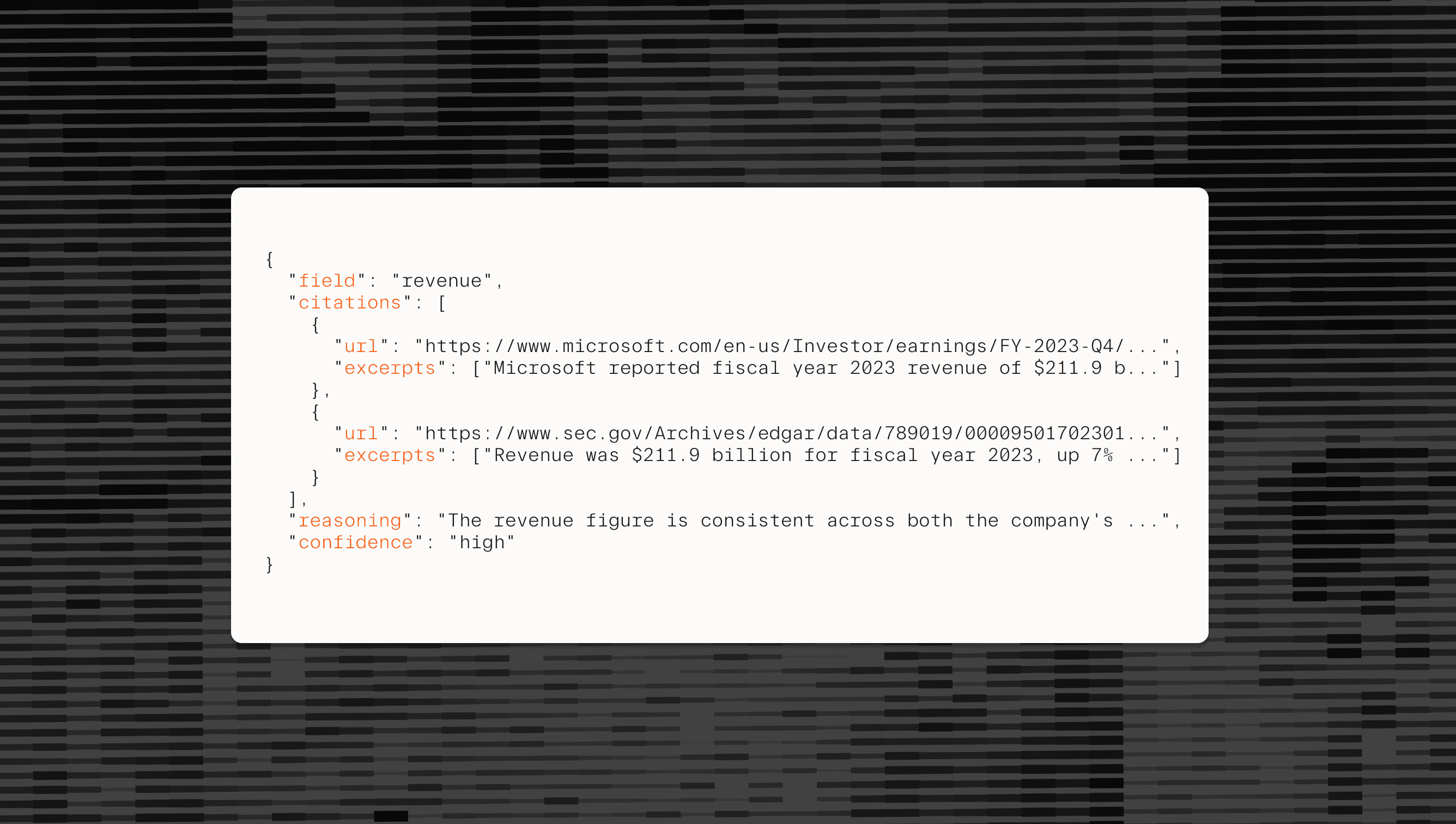

When building our higher-level [Task API](/blog/parallel-task-api), we recognized this mismatch early and built our own Search API for AI agents. The Parallel Search API accepts broad, declarative task objectives beyond simple keyword queries, enabling more complex searches. It also manages agent context by returning the most relevant dense excerpts in an LLM-friendly format, instead of incomplete snippets or full-page text. For agentic pipelines, this translates to fewer input tokens (reduced cost), better signal-to-noise to reason over (improved quality), and research that concludes in fewer steps (lower end-to-end latency).

**The result:** a simple one-shot interface for agent web access. This replaces multi-step search/scrape/extract/rerank pipelines that increase latency, inflate token costs, and introduce failure points that break agent workflows.
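To make the one-shot contrast concrete, here is a minimal sketch of what an objective-driven request and excerpt-based agent context could look like. The endpoint URL, the field names (`objective`, `search_queries`, `max_results`), and the response shape (a `url` plus a list of `excerpts` per result) are assumptions for illustration, not a confirmed Parallel schema. The code only builds the payload and formats results; it sends no network request.

```python
import json
from typing import Optional

# Hypothetical endpoint, for illustration only; confirm the real URL and schema in the docs.
SEARCH_URL = "https://api.parallel.ai/v1beta/search"


def build_search_request(objective: str,
                         search_queries: Optional[list] = None,
                         max_results: int = 5) -> dict:
    """Build one request around a declarative objective (field names are assumed)."""
    payload = {"objective": objective, "max_results": max_results}
    if search_queries:
        # Optional keyword hints that accompany the natural-language objective.
        payload["search_queries"] = list(search_queries)
    return payload


def excerpts_to_context(results: list, max_chars: int = 2000) -> str:
    """Flatten excerpt-style results into a compact, source-tagged LLM context.

    Assumes each result looks like {"url": ..., "excerpts": [...]}. Stops adding
    excerpts as soon as the next one would push the total past max_chars.
    """
    lines, used = [], 0
    for result in results:
        for excerpt in result.get("excerpts", []):
            line = f"[{result['url']}] {excerpt}"
            if used + len(line) > max_chars:
                return "\n".join(lines)
            lines.append(line)
            used += len(line)
    return "\n".join(lines)


request = build_search_request(
    "Find which HR software firm EQT agreed to acquire this week",
    search_queries=["EQT acquisition HR software"],
)
print(json.dumps(request, indent=2))
```

The character budget stands in for the context management the Search API performs server-side: the agent receives a bounded set of dense, attributed passages in a single step instead of running its own search, scrape, and rerank loop.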

## **Leading performance at the lowest cost**

To evaluate the real-world performance of the Parallel Search MCP Server, we created the WISER-Search benchmark, which blends WISER-Fresh (queries requiring the freshest data from the web) and WISER-Atomic (hard real-world business queries). This combination reflects the challenges AI agents face in production environments across breaking news, financial data, technical documentation, and competitive intelligence.

Sample questions include:

WISER-Fresh

- Which automaker signed today’s major chip‑supply deal with Samsung Electronics?
- Which HR software firm did EQT agree to buy today?
- How many shares does Firefly Aerospace plan to offer in its IPO filing?
- Which Azerbaijani energy firm signed Ukraine’s first Transbalkan gas deal?
- What revenue range did Audi forecast after cutting its guidance in July 2025?

WISER-Atomic

- In fiscal year 2024, what percentage of Salesforce's subscription and support revenue came from the segment that includes its Tableau acquisition, and how does this compare to the company's overall CRM market share in 2023? Please share all of your factual findings that helped you answer the question, in your final answer.
- According to the International Debt Statistics 2023 by the World Bank, calculate the average Foreign Direct Investment amount (in millions of USD) for Sri Lanka, Turkmenistan, and Niger in 2019. Round your answer to two decimal places.
- Navigate to the website http://www.flightaware.com. This is the main domain for the target company. Once you are on their website, locate their careers or jobs page. Print the careers page URL.

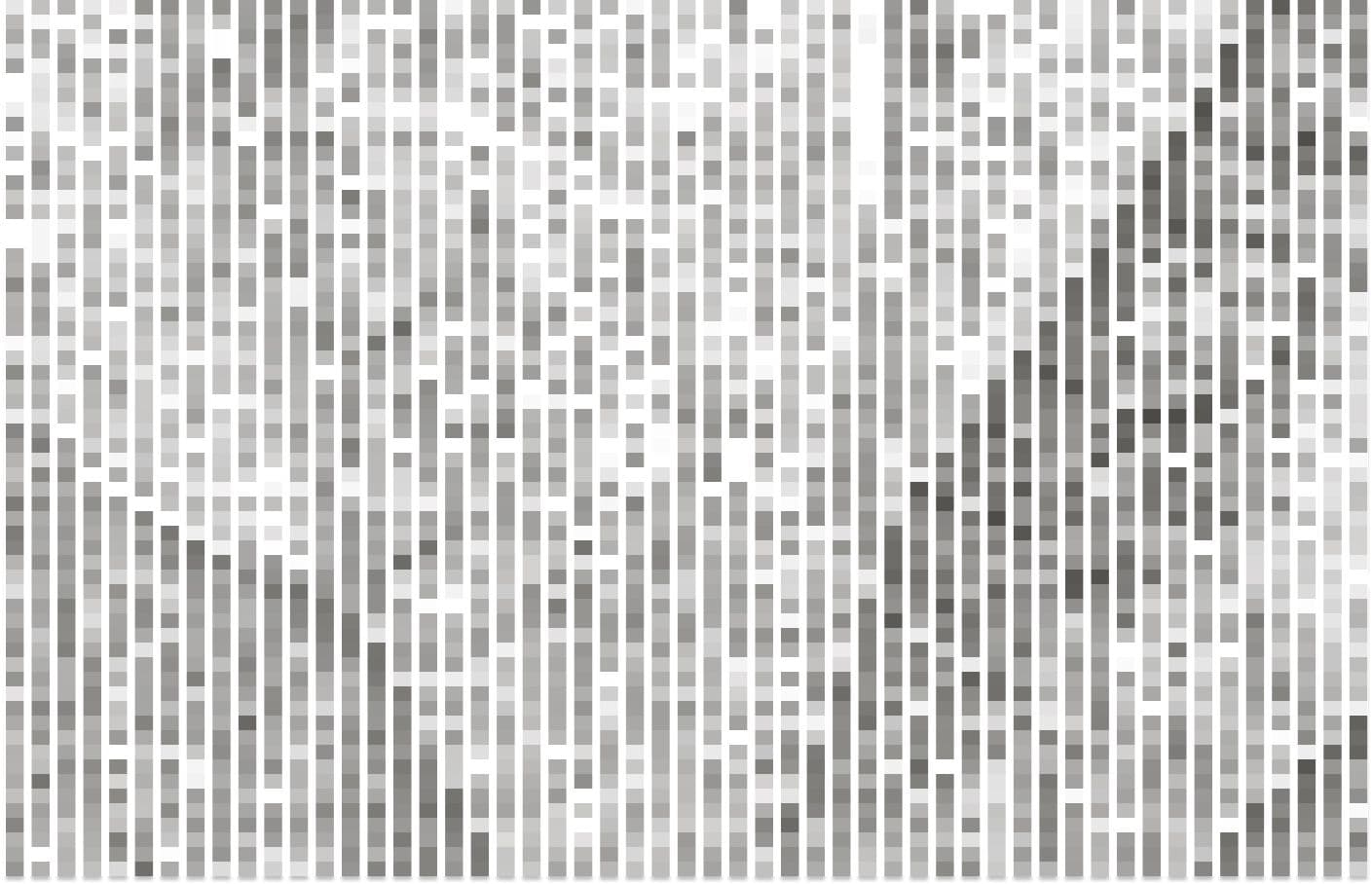

Results on the blended WISER-Search benchmark, comparing three web search solutions (Parallel Search MCP, Exa Search MCP via tool calling, and native web search) across four LLMs (GPT-4.1, o4-mini, o3, and Claude Sonnet 4), are shown below.

Chart: accuracy (%) versus cost (CPM, USD per 1,000 requests, linear scale) for each search solution and model.

### About this benchmark

This benchmark, created by Parallel, blends WISER-Fresh and WISER-Atomic. WISER-Fresh is a set of 76 queries requiring the freshest data from the web, generated by Parallel with OpenAI o3-pro. WISER-Atomic is a set of 120 hard real-world business queries based on use cases from Parallel customers, for 196 queries in total.

### Distribution

- ~40% WISER-Fresh (76 of 196 queries)
- ~60% WISER-Atomic (120 of 196 queries)
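The 40/60 split is rounded from the query counts. A quick check of the exact shares also shows how much a single question moves the accuracy score on a 196-question set, which helps when reading small gaps in the results table. This is plain arithmetic on the counts stated above.

```python
# Query counts from the benchmark description.
counts = {"WISER-Fresh": 76, "WISER-Atomic": 120}
total = sum(counts.values())

# Exact share of each sub-benchmark in the blend.
shares = {name: n / total for name, n in counts.items()}
# Accuracy change (in percentage points) from one additional correct answer.
points_per_question = 100 / total

for name, share in shares.items():
    print(f"{name}: {counts[name]} of {total} queries ({share:.1%})")
print(f"one question = {points_per_question:.2f} accuracy points")
```

Run as written, this gives shares of about 38.8% and 61.2%, and roughly half a percentage point of accuracy per question.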

### Search MCP Benchmark

| Series | Model | Cost (CPM, USD per 1,000 requests) | Accuracy (%) |
| --- | --- | --- | --- |
| Parallel | GPT-4.1 w/ Parallel Search MCP | 21 | 74.9 |
| Parallel | o4-mini w/ Parallel Search MCP | 90 | 82.14 |
| Parallel | o3 w/ Parallel Search MCP | 192 | 80.61 |
| Parallel | Claude Sonnet 4 w/ Parallel Search MCP | 92 | 78.57 |
| Native | GPT-4.1 w/ Native Search | 27 | 70 |
| Native | o4-mini w/ Native Search | 190 | 77 |
| Native | o3 w/ Native Search | 351 | 79.08 |
| Native | Claude Sonnet 4 w/ Native Search | 122 | 68.83 |
| Exa | GPT-4.1 w/ Exa Search MCP | 40 | 58.67 |
| Exa | o4-mini w/ Exa Search MCP | 199 | 61.73 |
| Exa | o3 w/ Exa Search MCP | 342 | 56.12 |
| Exa | Claude Sonnet 4 w/ Exa Search MCP | 140 | 67.13 |
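The headline comparison with native search can be recomputed directly from the table. This short script, with values transcribed from the rows above and model labels shortened, derives for each model the fraction of native-search cost saved and the accuracy difference in percentage points when switching to the Parallel Search MCP.

```python
# (cost in CPM, accuracy in %) transcribed from the Search MCP Benchmark table.
parallel = {"GPT-4.1": (21, 74.9), "o4-mini": (90, 82.14),
            "o3": (192, 80.61), "Claude Sonnet 4": (92, 78.57)}
native = {"GPT-4.1": (27, 70.0), "o4-mini": (190, 77.0),
          "o3": (351, 79.08), "Claude Sonnet 4": (122, 68.83)}

savings = {}
for model, (p_cost, p_acc) in parallel.items():
    n_cost, n_acc = native[model]
    # Fraction of the native-search cost that is saved by using Parallel Search MCP.
    savings[model] = 1 - p_cost / n_cost
    print(f"{model:16s} cost -{savings[model]:.0%}  accuracy {p_acc - n_acc:+.2f} pts")
```

The savings come out to roughly 53% for o4-mini and 45% for o3, and roughly 22% to 25% for GPT-4.1 and Claude Sonnet 4, with higher accuracy for all four models.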


**The results show that agents using Parallel Search MCP achieve higher accuracy at up to 50% lower total cost** than agents using native web search implementations, across all four models tested. Agentic workflows using the Parallel Search MCP make fewer tool calls and receive denser excerpts to reason over. As a result, total cost (Search API cost + LLM cost) and latency are meaningfully reduced, while result quality improves.
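To see why fewer tool calls and denser excerpts lower the total, here is a minimal cost model. It assumes total cost per query is the number of searches times a per-search price plus LLM token cost at per-million-token rates. Every price and usage figure below is a hypothetical placeholder, not a number from the benchmark.

```python
from dataclasses import dataclass


@dataclass
class AgentRun:
    """Usage for one query; all figures used below are hypothetical."""
    tool_calls: int      # number of search requests the agent issued
    input_tokens: int    # prompt plus retrieved search content fed to the LLM
    output_tokens: int   # tokens the LLM generated


def total_cost_usd(run: AgentRun, price_per_search: float,
                   usd_per_m_input: float, usd_per_m_output: float) -> float:
    """Total cost = search API cost + LLM token cost."""
    search_cost = run.tool_calls * price_per_search
    llm_cost = (run.input_tokens * usd_per_m_input
                + run.output_tokens * usd_per_m_output) / 1_000_000
    return search_cost + llm_cost


# Placeholder prices: $0.005 per search, $2 / $8 per million input / output tokens.
PRICES = dict(price_per_search=0.005, usd_per_m_input=2.0, usd_per_m_output=8.0)

# Hypothetical runs: many snippet-heavy calls versus fewer calls with dense excerpts.
multi_step = AgentRun(tool_calls=6, input_tokens=60_000, output_tokens=1_500)
dense = AgentRun(tool_calls=2, input_tokens=15_000, output_tokens=1_000)

for name, run in [("multi-step", multi_step), ("dense excerpts", dense)]:
    cost = total_cost_usd(run, **PRICES)
    print(f"{name}: ${cost:.3f} per query, CPM ${cost * 1000:.0f}")
```

In this toy setup the input-token term dominates, so trimming retrieved content matters as much as cutting the number of searches.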

## **Easily replace LLM native search with Parallel Search MCP**

If you're building an AI agent that needs web access, the Parallel Search MCP Server is easy to integrate with any MCP-aware LLM. Simply change one parameter and see immediate results.
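As a hedged illustration of the one-parameter swap, the sketch below builds the `tools` argument for OpenAI's Responses API, switching between its native `web_search_preview` tool and a remote MCP tool entry. The server URL, the `server_label` value, the `PARALLEL_API_KEY` environment variable, and the bearer-token header format are assumptions; check the Parallel documentation for the actual values. The function only builds configuration; the API call itself is left as a comment.

```python
import os

# Assumed endpoint for the Parallel Search MCP server; confirm it in the Parallel docs.
PARALLEL_MCP_URL = "https://search-mcp.parallel.ai/mcp"


def search_tools(use_parallel: bool) -> list:
    """Return the `tools` list for an OpenAI Responses API request."""
    if not use_parallel:
        return [{"type": "web_search_preview"}]  # LLM-native web search
    api_key = os.environ.get("PARALLEL_API_KEY", "")  # hypothetical variable name
    return [{
        "type": "mcp",                      # remote MCP tool
        "server_label": "parallel_search",  # any label you choose
        "server_url": PARALLEL_MCP_URL,
        # Auth header format is an assumption; see the Parallel docs.
        "headers": {"Authorization": f"Bearer {api_key}"},
        "require_approval": "never",
    }]


# Usage (requires the `openai` package, API keys, and network access):
# from openai import OpenAI
# client = OpenAI()
# resp = client.responses.create(model="gpt-4.1", tools=search_tools(True),
#                                input="Which HR software firm did EQT agree to buy today?")
# print(resp.output_text)
```

Everything else in the agent stays the same; only the `tools` value changes, which is what keeps the migration small.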

Start building with state-of-the-art web search purpose-built for agents today. Get started in our [Developer Platform](https://platform.parallel.ai/play/search) or dive directly into the [Documentation](https://docs.parallel.ai/features/remote-mcp).

## **Methodology**

**Benchmark details**: All tests were conducted on a dataset spanning real-world scenarios, including breaking news, financial data, technical documentation, and competitive intelligence queries. The dataset combines WISER-Fresh (76 easily verifiable questions about events on the day the dataset was generated, created with OpenAI o3-pro) and WISER-Atomic (120 questions based on real-world use cases from Parallel customers).

**Evaluation**: Responses were evaluated using standardized LLM evaluators measuring accuracy against verified ground truth answers.

**Cost calculation**: Cost reflects the average cost per query across all questions run. This cost includes both the search API call and LLM token cost.
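The evaluation and cost steps above can be sketched as a small aggregation: accuracy is the share of answers the evaluator marks correct, and CPM is the average per-query cost (search API plus LLM tokens) scaled to 1,000 requests. The post does not specify the evaluator's prompt or model, so a case-insensitive exact-match judge is swapped in here as a stand-in; the record field names and sample values are hypothetical.

```python
from statistics import mean


def exact_match_judge(answer: str, ground_truth: str) -> bool:
    """Stand-in for the LLM evaluator: case-insensitive exact match (an assumption)."""
    return answer.strip().lower() == ground_truth.strip().lower()


def score_run(records: list, judge=exact_match_judge) -> tuple:
    """Return (accuracy %, CPM) for one model and search configuration.

    Each record holds 'answer', 'ground_truth', 'search_cost', and 'llm_cost'
    (costs in USD); these field names are hypothetical.
    """
    correct = sum(judge(r["answer"], r["ground_truth"]) for r in records)
    accuracy = 100 * correct / len(records)
    # Average per-query cost (search API + LLM tokens), scaled to 1,000 requests.
    avg_cost = mean(r["search_cost"] + r["llm_cost"] for r in records)
    return accuracy, avg_cost * 1000


# Placeholder records, not benchmark data.
records = [
    {"answer": "Acme Corp", "ground_truth": "acme corp", "search_cost": 0.005, "llm_cost": 0.015},
    {"answer": "42", "ground_truth": "42", "search_cost": 0.005, "llm_cost": 0.015},
    {"answer": "Globex", "ground_truth": "Initech", "search_cost": 0.005, "llm_cost": 0.015},
    {"answer": "1.5 million", "ground_truth": "1.5 million", "search_cost": 0.005, "llm_cost": 0.015},
]
accuracy, cpm = score_run(records)
print(f"accuracy {accuracy:.2f}%, CPM ${cpm:.2f}")
```

Passing a different `judge` callable is where an LLM-based grader would plug in; the aggregation itself does not change.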

**Testing dates**: WISER-Fresh data was generated on July 28th, 2025, and testing was conducted within 24 hours of dataset generation. WISER-Atomic testing was conducted from July 28th to July 29th, 2025.

By Parallel

July 31, 2025