# State of the Art Deep Research APIs

Parallel Task API processors achieve state-of-the-art performance on BrowseComp[BrowseComp](https://openai.com/index/browsecomp/), a challenging benchmark built by OpenAI to test web search agents' deep research capabilities. Our best processor reaches 27% accuracy— higher than the accuracy achieved by humans given 2 hours per problem.

## The deep research challenge

BrowseComp represents a new class of research problems that resist conventional web search. Unlike simple fact retrieval, these 1,266 questions require multi-hop reasoning across scattered sources, creative search reformulation when initial strategies fail, and synthesis of contextual clues spanning multiple time periods.

Consider this sample question:

Notes

"A piece of art was funded by a certain organization, according to an entry made on January 28, 2019. This piece of art belongs to an art form that has the support and acceptance of the local community, according to the organization's founder, as stated in a blog post from 2016. The artist who created the piece works under an alias, faced tough challenges growing up, features circles in their work often, and is fascinated by human behavior, according to another entry posted by the same organization from 2012. What's the title of the entry from 2019, as it appears on the organization's website?"

Human experts solve only about 25% of these questions correctly within two hours. While esoteric, they mirror critical business challenges that demand sophisticated needle-in-haystack capabilities: connecting regulatory filings across time periods for due diligence, synthesizing competitive intelligence from fragmented sources, tracking supply chain dependencies through multiple corporate layers, or conducting comprehensive background research where a single overlooked detail can derail major decisions.

These are the research tasks that matter most to organizations—complex, multi-faceted investigations that traditional search tools handle poorly but that can make or break strategic initiatives.

## State of the art results

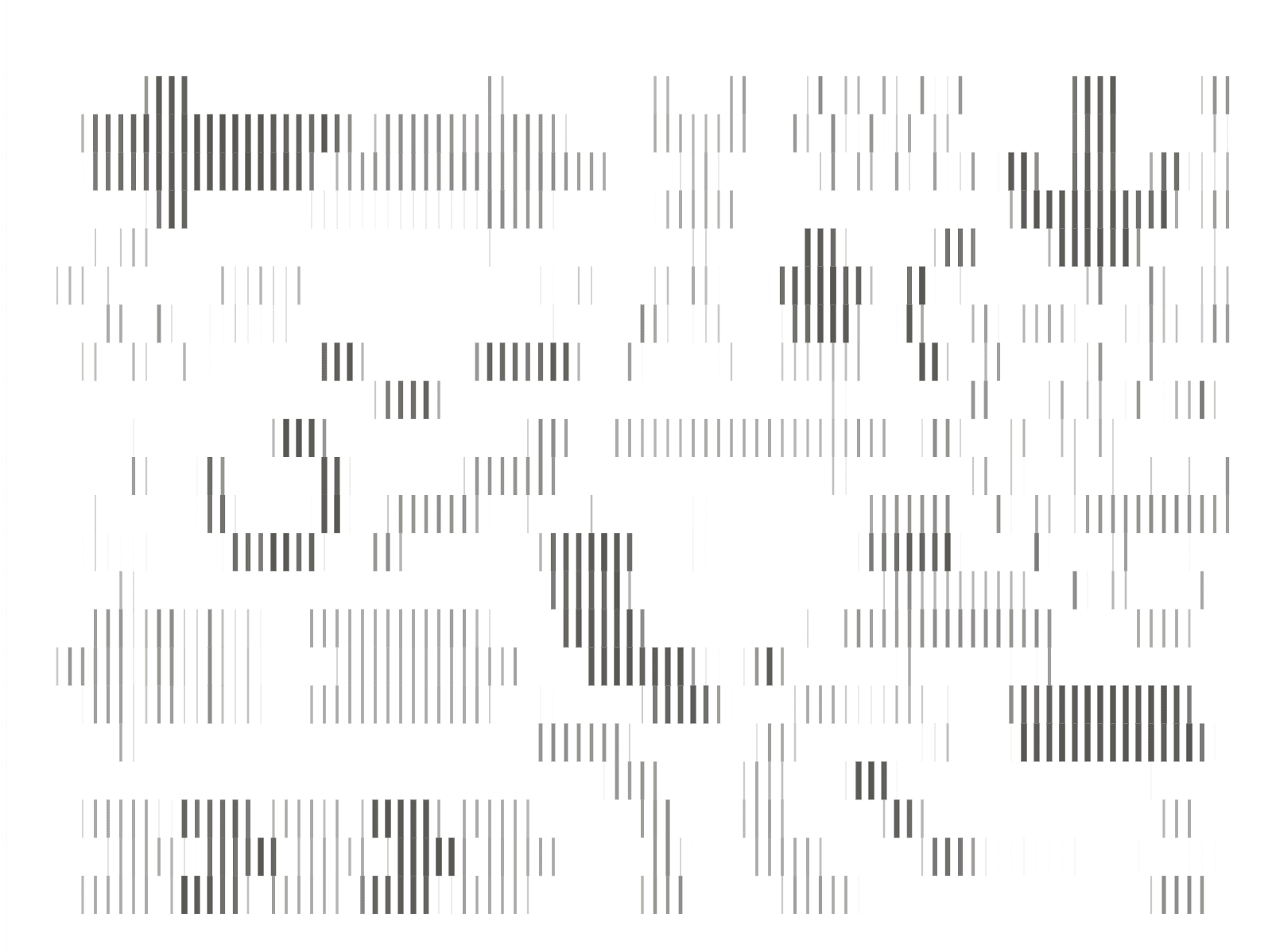

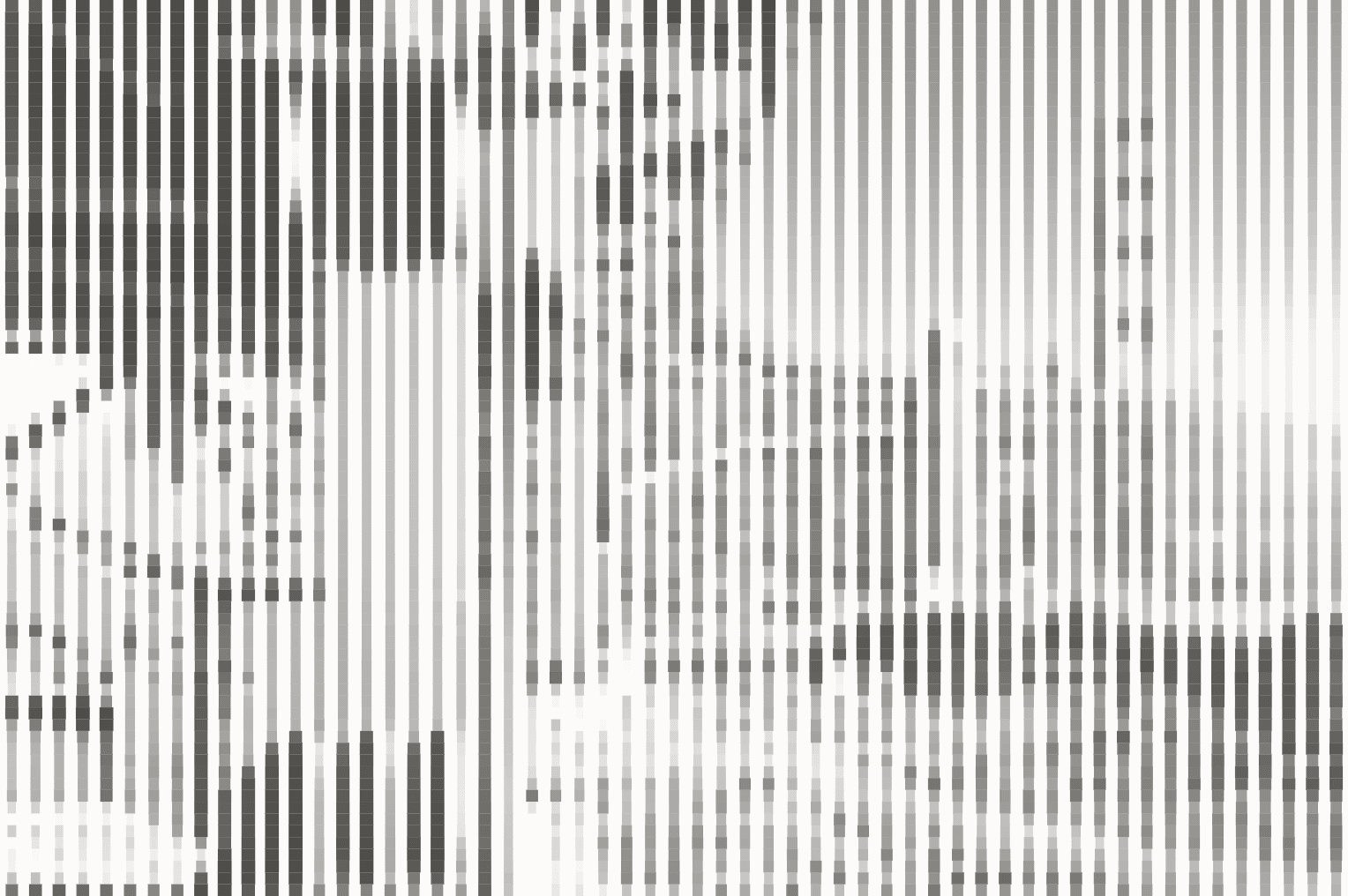

Parallel Task API processors outperform human experts and all commercially available web search and deep research APIs on BrowseComp, while being significantly cheaper.

CPM: USD per 1000 requests. Cost is shown on a Linear scale.

### About the benchmark

This benchmark, created by OpenAI, contains 1,266 questions requiring multi-hop reasoning, creative search formulation, and synthesis of contextual clues across time periods. Read our blog here[here](/blog/deep-research).

### Steps of reasoning

100% Multi-Hop questions

### Parallel 600/1200

Parallel 600 and 1200 are agents with the same architecture as Parallel Ultra (which costs 300 USD for 1000 queries), but with 2x and 4x the compute and cost.

### BrowseComp Scaled Compute Benchmark

| Series | Model | Cost (CPM) | Accuracy (%) | | --------- | ------------------------- | ---------- | ------------- | | Parallel | Base | 10 | 4 | | Parallel | Core | 25 | 7 | | Parallel | Pro | 100 | 17 | | Parallel | Ultra | 300 | 27 | | Parallel | Parallel 600 | 600 | 39 | | Parallel | Parallel 1200 | 1200 | 48 | | Others | GPT-4.1 w/ browsing | 53 | 1 | | Others | Claude Sonnet 4 w/ search | 1168 | 6 | | Others | Exa Research | 275 | 14 | | Others | Perplexity Deep Research | 880 | 8 |

CPM: USD per 1000 requests. Cost is shown on a Linear scale.

### About the benchmark

This benchmark, created by OpenAI, contains 1,266 questions requiring multi-hop reasoning, creative search formulation, and synthesis of contextual clues across time periods. Read our blog here[here](/blog/deep-research).

### Steps of reasoning

100% Multi-Hop questions

### Parallel 600/1200

Parallel 600 and 1200 are agents with the same architecture as Parallel Ultra (which costs 300 USD for 1000 queries), but with 2x and 4x the compute and cost.

Parallel-ultra establishes new state-of-the-art accuracy while remaining cost-efficient and our other processors complete the curve to establish the highest accuracy at each price point. This extends our track record from SimpleQA and WISER-Atomic[SimpleQA and WISER-Atomic](/blog/parallel-task-api), demonstrating consistent leadership as research challenges scale from single-hop to complex multi-hop scenarios across a wide range of price points.

OpenAI has published SOTA accuracy of 51.5% for their Deep Research Agent - trained on browse-comp tasks. This was achieved at an undisclosed computation shown on an exponential scale and isn’t available for API use. Since we’ve built our system to be able to optimize performance based on budgets for computation and retrieval, we were able to test our system at a budget level far beyond our Ultra processor with no changes to the underlying architecture. We observe (1) accuracy improves consistently with budget and (2) we were able to achieve 48% accuracy, without any optimization or fine-tuning on the dataset’s distribution. The implications extend beyond benchmarks: our customers can dial up performance for critical tasks or dial down performance for routine queries, providing flexibility unavailable in specialized systems.

## **Build with Parallel deep research**

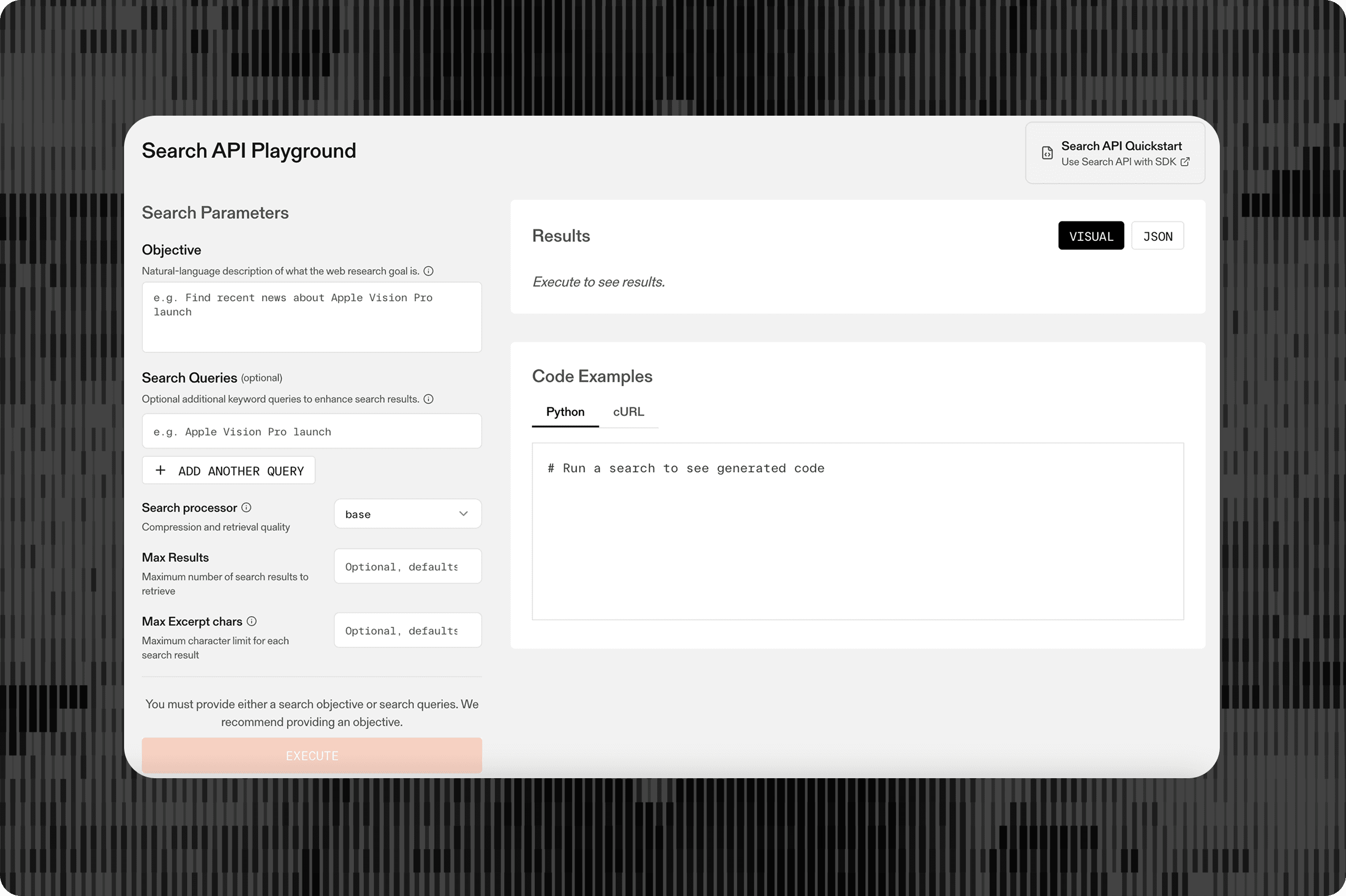

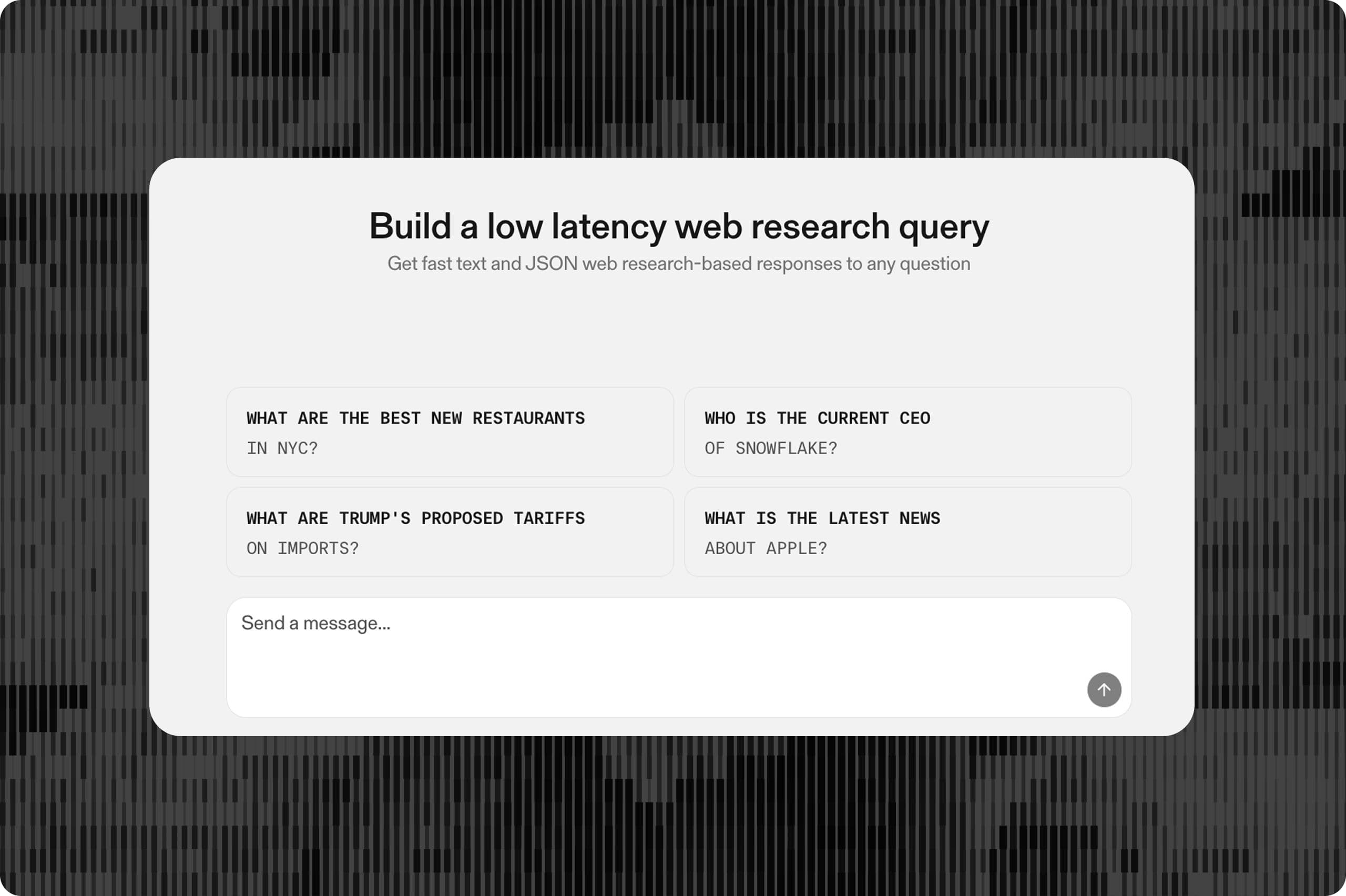

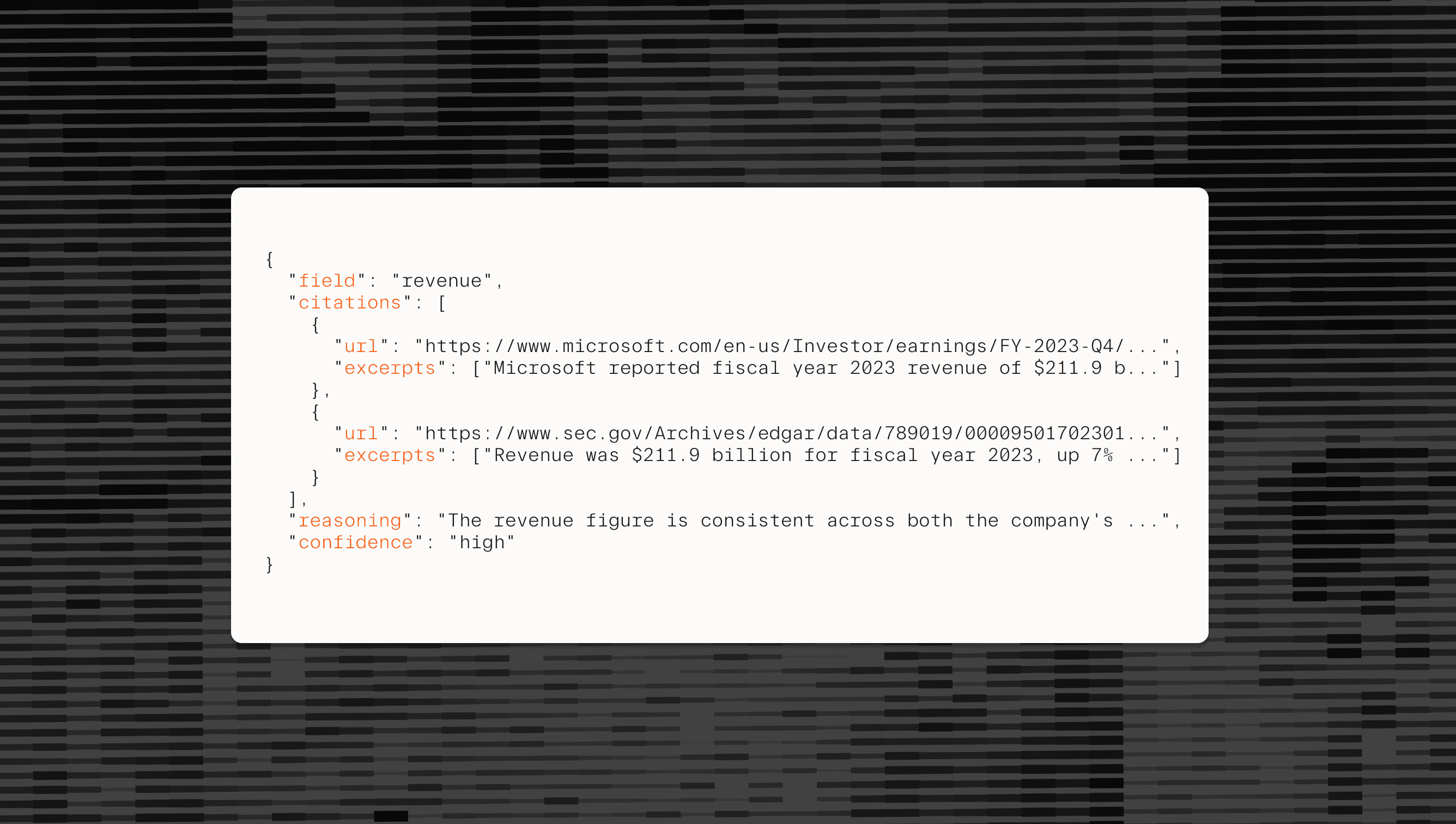

Get started building with the Parallel Task API pro and ultra processors in our Developer Platform[Developer Platform](https://platform.parallel.ai/home) or dive directly into our documentation[documentation](https://docs.parallel.ai/task-api/task-deep-research).

1234567891011121314from parallel import Parallel

# Initialize the Parallel client

client = Parallel(api_key="your-api-key-here")

# Execute the task run (blocking)

run_result = client.task_run.execute(

input="Company",

output="Top adverse media, top risk factors, sample of customers,top competitors and their price/features/messaging",

processor="ultra"

)

print(run_result)

``` from parallel import Parallel # Initialize the Parallel clientclient = Parallel(api_key="your-api-key-here") # Execute the task run (blocking)run_result = client.task_run.execute( input="Company", output="Top adverse media, top risk factors, sample of customers,top competitors and their price/features/messaging", processor="ultra")print(run_result) ``` ## **Notes on Methodology**

Benchmark Details: All benchmarks were run on a random 100 question subset of the original dataset, which was kept constant across experiments with our own agents and those of competitors.

LLM Evaluator: The agents’ responses were compared against the ground truth using the same standard LLM evaluator and evaluation criteria.

Benchmark Dates: All tests were conducted between Jun 10 and Jun 12, 2025.

By Parallel

June 17, 2025